Abstract:

The approximate logic neuron model (ALNM) is a single neural model with a dynamic dendritic structure. During training, the ALNM uses a neural pruning function to eliminate unnecessary dendrite branches and synapses, but training it with the backpropagation algorithm restricted its computational capacity. A team including a researcher from Kanazawa University instead trained the ALNM with a heuristic optimization method called the states of matter search (SMS) algorithm and achieved superior performance on six benchmark classification problems.

Kanazawa, Japan – Artificial neural networks are machine learning systems composed of a large number of connected nodes called artificial neurons. Like the neurons in a biological brain, these artificial neurons are the basic units that perform neural computation and solve problems. Advances in neurobiology have revealed the important role that dendritic structures play in neural computation, and this has led to the development of artificial neuron models based on these structures.

The recently developed approximate logic neuron model (ALNM) is a single neural model that has a dynamic dendritic structure. While being trained on a specific problem, the ALNM can use a neural pruning function to eliminate unnecessary dendrite branches and synapses. The resulting simplified model can then be implemented as a hardware logic circuit.

However, the well-known backpropagation (BP) algorithm that was used to train the ALNM actually restricted the neuron model’s computational capacity. “The BP algorithm was sensitive to initial values and could easily become trapped in local minima,” says corresponding author Yuki Todo of Kanazawa University’s Faculty of Electrical and Computer Engineering. “We therefore evaluated the capabilities of several heuristic optimization methods for training the ALNM.”

After a series of experiments, the states of matter search (SMS) algorithm was selected as the most appropriate training method for the ALNM. Six benchmark classification problems were then used to evaluate the optimization performance of the ALNM trained with SMS, and the results showed that SMS outperformed BP and the other heuristic algorithms in both accuracy and convergence speed.

“A classifier based on the ALNM and SMS was also compared with several other popular classification methods,” states Associate Professor Todo, “and the statistical results verified this classifier’s superiority on these benchmark problems.”

During training, the ALNM simplified its neural structure through synaptic pruning and dendritic pruning, and the simplified structures were then replaced by logic circuits. These circuits also achieved satisfactory classification accuracy on each of the benchmark problems. Because such logic circuits are easy to implement in hardware, the ALNM and SMS may in future be applied to increasingly complex, high-dimensional real-world problems.
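The two pruning procedures can be sketched as follows. This is a minimal illustration, assuming that after training every synapse has settled into one of the four connection cases named in the Figure 1 caption (direct, inverse, constant 0, constant 1) and that each branch multiplies its synaptic outputs, as in related dendritic neuron models. The `prune` function and the dictionary layout are hypothetical, not the authors' implementation.

```python
def prune(branches):
    """Apply dendritic and synaptic pruning (assumed rules, not the paper's code).

    branches: list of dicts mapping an input name to its connection case,
    one of "direct", "inverse", "constant 0" or "constant 1".
    """
    kept = []
    for branch in branches:
        # Dendritic pruning: a constant-0 synapse forces the branch product
        # to 0, so the whole branch can be removed.
        if "constant 0" in branch.values():
            continue
        # Synaptic pruning: a constant-1 synapse multiplies the product by 1,
        # so it has no effect and can be dropped from the surviving branch.
        kept.append({name: case for name, case in branch.items() if case != "constant 1"})
    return kept


branches = [
    {"x1": "direct", "x2": "constant 1", "x3": "inverse"},
    {"x1": "constant 0", "x2": "direct", "x3": "direct"},
    {"x1": "constant 1", "x2": "constant 1", "x3": "direct"},
]
print(prune(branches))
```

Here the second branch is removed entirely and the constant-1 synapses vanish from the other two, leaving `[{'x1': 'direct', 'x3': 'inverse'}, {'x3': 'direct'}]`. Inputs that no longer appear in any surviving branch (here `x2`) are effectively unused by the simplified model.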

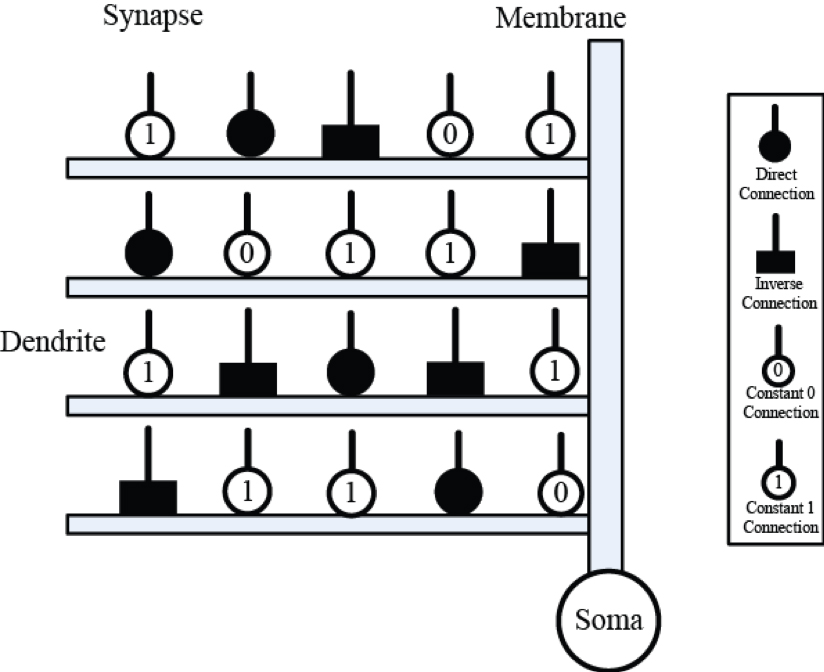

Figure 1. Structural morphology of ALNM with four branches of dendrites.

Axons of presynaptic neurons (input X) connect to the dendritic branches (horizontal rectangles) through synaptic layers; the membrane layer (vertical rectangles) sums the dendritic activations and passes the sum to the soma (black sphere). Each synapse takes one of four connection cases: the direct connection, the inverse connection, the constant-0 connection and the constant-1 connection.
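The layered structure in Figure 1 can be sketched in Python. This is a minimal sketch assuming the formulation commonly used for dendritic neuron models of this kind, which the caption does not spell out: each synapse applies a sigmoid to `k * (w * x - q)`, each branch multiplies its synaptic outputs, the membrane sums the branches, and the soma applies a final sigmoid. The function names and the default values of `k`, `ks` and `theta_s` are hypothetical, and inputs are assumed to be scaled to [0, 1]. Under the same assumption, the four connection cases can be read off each synapse's `(w, q)` pair.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def alnm_forward(x, w, q, k=5.0, ks=5.0, theta_s=0.5):
    """Forward pass of a dendritic neuron (assumed formulation).

    x: list of N inputs in [0, 1]
    w, q: M x N nested lists of synaptic weights and thresholds (M branches)
    k, ks, theta_s: hypothetical synaptic/soma steepness and soma threshold
    """
    v = 0.0  # membrane layer: sum over branches
    for w_j, q_j in zip(w, q):
        z_j = 1.0  # dendritic layer: product over the synapses of branch j
        for x_i, w_ij, q_ij in zip(x, w_j, q_j):
            z_j *= sigmoid(k * (w_ij * x_i - q_ij))  # synaptic layer
        v += z_j
    return sigmoid(ks * (v - theta_s))  # soma


def connection_case(w_ij: float, q_ij: float) -> str:
    """Classify a synapse for inputs in [0, 1] from its (w, q) pair."""
    if q_ij < 0 and q_ij < w_ij:
        return "constant 1"  # w*x - q > 0 for every x in [0, 1]
    if q_ij > 0 and q_ij > w_ij:
        return "constant 0"  # w*x - q < 0 for every x in [0, 1]
    if w_ij > 0 and 0 < q_ij < w_ij:
        return "direct"  # output rises as x crosses q/w
    if w_ij < 0 and w_ij < q_ij < 0:
        return "inverse"  # output falls as x crosses q/w
    return "boundary"  # edge cases such as q == 0 or q == w
```

For example, `connection_case(1.0, 0.5)` gives `"direct"` with an effective threshold of `q / w = 0.5`, and a one-branch neuron with that synapse fires (output above 0.5) for `x = 0.9` but not for `x = 0.1`.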

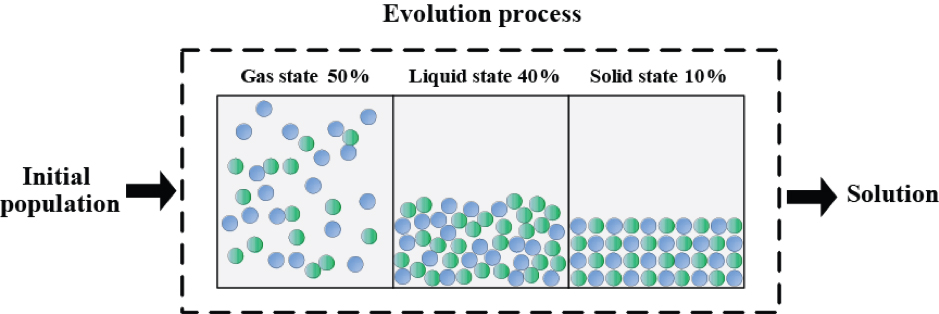

Figure 2. Evolution process of states of matter search (SMS).

The evolution process of the states of matter search (SMS) is based on the physical principle of the thermal-energy motion ratio. The whole optimization process is divided into three phases: the gas state (50% of the iterations), the liquid state (40%) and the solid state (10%). Each state applies its own operations with a different exploration-exploitation ratio. The gas state performs pure exploration at the beginning of the optimization process, the liquid state combines exploration and exploitation, and the solid state focuses solely on exploitation in the final part of the process. Structuring the optimization in this way achieves a suitable balance between exploration and exploitation.
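The 50/40/10 phase schedule can be illustrated with a small population-based minimizer. This is a deliberately simplified sketch, not the full SMS algorithm: it keeps only the idea that the step size shrinks from the gas to the liquid to the solid state, and omits SMS's own movement, collision and reinitialization operators. The names `phase_at`, `STEP_SCALE` and `sms_like_minimize`, and all step-scale values, are hypothetical.

```python
import random


def phase_at(t: int, t_max: int) -> str:
    """Return the state of matter governing iteration t (0-based)."""
    # Integer comparisons keep the 50% / 40% / 10% boundaries exact.
    if 10 * t < 5 * t_max:
        return "gas"
    if 10 * t < 9 * t_max:
        return "liquid"
    return "solid"


# Hypothetical step scales (fraction of the search width): large moves
# explore, small moves exploit. These are NOT the SMS paper's parameters.
STEP_SCALE = {"gas": 0.5, "liquid": 0.1, "solid": 0.01}


def sms_like_minimize(f, dim, n_particles=20, t_max=200, bounds=(-5.0, 5.0), seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    width = hi - lo
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    best = list(min(pop, key=f))
    for t in range(t_max):
        scale = STEP_SCALE[phase_at(t, t_max)] * width
        for i, p in enumerate(pop):
            # Drift toward the best-so-far point plus a phase-scaled Gaussian kick,
            # clipped to the search bounds.
            cand = [
                min(hi, max(lo, x + rng.random() * (b - x) + rng.gauss(0.0, scale)))
                for x, b in zip(p, best)
            ]
            # Greedy replacement: keep a move only if it improves the particle.
            if f(cand) < f(p):
                pop[i] = cand
                if f(cand) < f(best):
                    best = list(cand)
    return best, f(best)


def sphere(v):
    return sum(x * x for x in v)


best, value = sms_like_minimize(sphere, dim=2)
print(best, value)
```

With `t_max=200`, iterations 0 to 99 run in the gas state, 100 to 179 in the liquid state and 180 to 199 in the solid state, so the search moves from wide random jumps to fine local refinement around the best point found.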

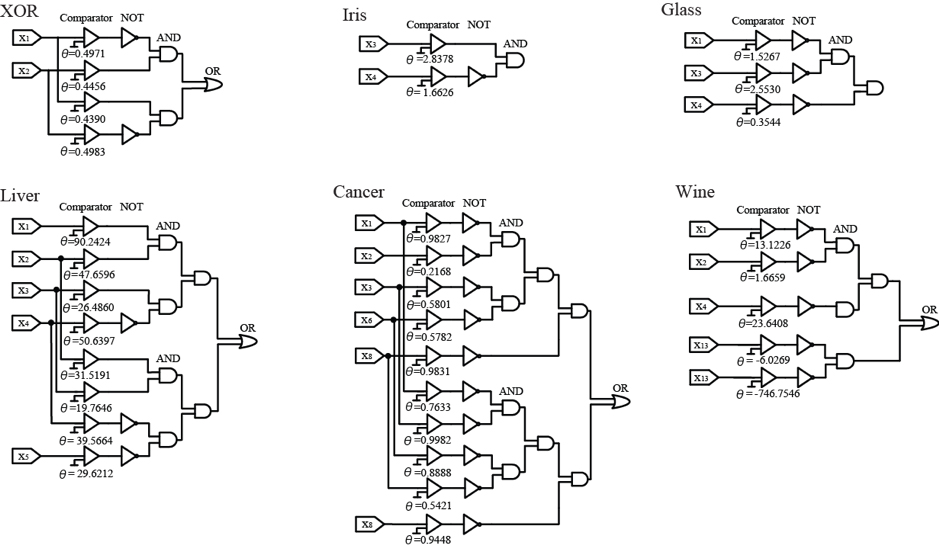

Figure 3. The logic circuits of six benchmark classification problems.

The ALNM can simplify the neural model through synaptic pruning and dendritic pruning during training. The simplified ALNM structure can then be replaced by a logic circuit consisting only of ‘comparators’ and logic NOT, AND and OR gates. Each ‘comparator’ acts as an analog-to-digital converter that compares the input with a threshold θ: if the input X exceeds θ, the comparator outputs 1; otherwise, it outputs 0. Implemented in hardware, these logic circuits can serve as efficient classifiers for the six benchmark problems.
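A pruned ALNM can be evaluated as such a circuit in a few lines. This sketch assumes that each surviving direct synapse becomes a comparator, each inverse synapse a comparator followed by a NOT gate, each dendritic branch an AND gate, and the membrane layer an OR gate. The function names and the example thresholds are hypothetical and do not correspond to any of the six circuits in Figure 3.

```python
def comparator(x: float, theta: float) -> int:
    # Analog-to-digital step: 1 if the input exceeds the threshold, else 0.
    return 1 if x > theta else 0


def synapse_bit(x_i: float, theta: float, inverted: bool) -> int:
    c = comparator(x_i, theta)
    return 1 - c if inverted else c  # an inverse connection adds a NOT gate


def branch_output(x, synapses) -> int:
    """AND gate over one branch; synapses are (input_index, theta, inverted)."""
    return int(all(synapse_bit(x[i], th, inv) for i, th, inv in synapses))


def logic_circuit(x, branches) -> int:
    # OR gate over all branches replaces the membrane-layer sum.
    return int(any(branch_output(x, s) for s in branches))


# Hypothetical circuit: (x0 > 0.5 AND NOT x1 > 0.3) OR (x2 > 0.7)
branches = [
    [(0, 0.5, False), (1, 0.3, True)],
    [(2, 0.7, False)],
]
print(logic_circuit([0.6, 0.2, 0.1], branches))  # first branch fires
print(logic_circuit([0.6, 0.5, 0.1], branches))  # NOT gate blocks the first branch
```

The first call prints `1` and the second prints `0`. Because the circuit uses only threshold comparisons and Boolean gates, no multiplications or sigmoid evaluations remain after training, which is what makes the hardware implementation cheap.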

Article

Approximate logic neuron model trained by states of matter search algorithm

Journal: Knowledge-Based Systems

Authors: Junkai Ji, Shuangbao Song, Yajiao Tang, Shangce Gao, Zheng Tang, Yuki Todo

DOI: 10.1016/j.knosys.2018.08.020

Funders

This research was partially supported by Hunan Provincial Status and Decision-making Advisory Research, China (No. 13C1165) and the Hunan Educational Bureau Research Item, China (No. 2014BZZ270).
